What is the first step in building any AI/ML model?

It’s defining the problem.

But what comes after?

It’s gathering relevant data, guided by the use case and the machine learning method you plan to use!

Looking closer at those methods: supervised learning relies on large labeled datasets, and semi-supervised learning on a small labeled set combined with unlabeled data, for classification and accurate predictions. Unsupervised learning, by contrast, trains algorithms on raw, unlabeled data.

Before starting data collection and labeling in machine learning, there are several things that need to be defined or done:

Clearly define the problem and objectives: Understand the problem you are trying to solve and define the objectives of your model.

Identify the appropriate data sources: Determine where the data will come from and ensure that it is relevant and sufficient for the problem you are trying to solve.

Set up the data collection and annotation process: Define how the data will be collected, cleaned, and labeled, and set up the necessary tools and infrastructure.

Determine the sample size: Estimate the sample size required to achieve statistical significance and to prevent overfitting or underfitting.

Prepare the data: Clean and preprocess the data before feeding it into the model.

Define the evaluation metrics: Identify the metrics that will be used to evaluate the model's performance, such as accuracy, precision, recall, and F1 score.

Decide the type of model: Decide which machine learning approach will be used, such as supervised, unsupervised, or reinforcement learning.

Split the data: Split the data into training, validation, and test sets to evaluate the model's performance and prevent overfitting.
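
The last few steps in the list above (defining evaluation metrics and splitting the data) can be sketched in a few lines. This is a minimal illustration using only the Python standard library, not a prescribed workflow: the 80/10/10 split ratios, the fixed seed, and the function names `split_dataset` and `binary_metrics` are assumptions made for the example.

```python
import random


def split_dataset(items, train=0.8, val=0.1, seed=0):
    """Shuffle a copy of `items` and split it into train/validation/test lists.

    The 80/10/10 default ratios are an assumption for this sketch;
    whatever remains after train and validation becomes the test set.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded so the split is repeatable
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * val)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],
    )


def binary_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    correct = sum(t == p for t, p in pairs)

    accuracy = correct / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if (precision + recall)
        else 0.0
    )
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


train_set, val_set, test_set = split_dataset(range(100))
scores = binary_metrics([1, 0, 1, 1, 0], [1, 0, 0, 1, 1])
```

Keeping the test set untouched until the very end is what lets these metrics say something about unseen data rather than about the data the model was tuned on.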

Prior to initiating data collection and labeling in the process of developing machine learning models, it is essential to determine the methods for acquiring the necessary data.

These methods include utilizing in-house teams, outsourcing, and crowdsourcing. The step of identifying appropriate data sources will aid in determining the most appropriate method for data collection and labeling.

TL;DR of Data Sourcing Methods

In-house teams: Utilizing internal resources, such as employees or contractors, to collect and label data. This method allows for greater control over the data collection process and ensures that data is collected in accordance with the company's policies and guidelines.

Outsourcing: Hiring a third-party service provider to collect and label data. This method can be useful for specialized data collection tasks or for projects that require a large amount of data.

Crowdsourcing: Collecting data from a large, dispersed group of individuals, such as through online platforms or social media. This method can be useful for collecting large amounts of data quickly and inexpensively, but it may not always be as accurate or reliable as data collected by other methods.

The choice of method will depend on the specific requirements of the project, the available resources and budget, and the quality and reliability of the data required.

Outsourcing data collection and labeling to a specialized service provider is a popular option for many companies. This method can be particularly useful for projects that require a large amount of data or for specialized data collection tasks that require specific expertise.

There are a number of data collection and labeling service providers in the market that offer a range of services such as data annotation, data validation, and data transcription, as well as tools to automate the data collection process. Outsourcing data collection and labeling can help organizations save time and resources while ensuring that data is collected in a reliable and accurate manner.

Introducing FutureBeeAI

FutureBeeAI is an APAC (Asia Pacific) leader in data collection and labeling, offering innovative solutions for businesses and organizations looking to improve their machine learning capabilities. At FutureBeeAI, we understand the importance of high-quality and accurate data in driving business success.

Our team of experts is equipped with the knowledge and experience to handle any data collection and labeling project, no matter how complex.

AI/ML developers all over the world trust FutureBeeAI because, with access to state-of-the-art technology and streamlined processes, we are able to deliver high-quality data in a timely and cost-effective manner.

So, what can FutureBeeAI do for you? How can it help with your training data sourcing or labeling goals? Read till the end to find out!

5 Great Reasons to use FutureBeeAI to Supercharge your Data-Sourcing and Annotation Goals

FutureBeeAI offers a comprehensive suite of people, processes, and tools that can assist ML engineers and developers in building their AI/ML models.

#1. Access to Global Crowd Community

According to a study by Businesswire.com, the global data collection crowdsourcing market is expected to grow at a CAGR of 22.43% from 2022 to 2027. This growth is driven by factors such as the increasing demand for data-driven decision-making and the advancement of technology, which make it easier for organizations to access and process large amounts of data.
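
To put the quoted growth rate in perspective, the compound growth it implies can be worked out directly. The snippet below is only an arithmetic illustration: it assumes five full years of compounding between 2022 and 2027, and the helper name `growth_multiple` is made up for this example.

```python
def growth_multiple(cagr, years):
    """Return how many times larger a market becomes at a given CAGR."""
    return (1 + cagr) ** years


# 22.43% CAGR compounded over the five years from 2022 to 2027
multiple = growth_multiple(0.2243, 2027 - 2022)
print(f"{multiple:.2f}x")  # prints "2.75x"
```

In other words, under that assumption the market would be roughly 2.75 times its 2022 size by 2027.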

These statistics highlight the importance and growing trend of crowd-sourcing data collection in today's business scenario. It can help organizations access a larger and more diverse range of data, which in turn can lead to better decision-making, and it is also a cost-effective method that can be used to train Machine Learning models.

Data collection crowdsourcing relies on a network of individuals, known as "crowd workers," to gather information. These individuals can come from a variety of backgrounds and locations, and they are typically recruited and trained to perform specific tasks of data collection, annotation, and labeling.

The FutureBeeAI community consists of 10,000+ crowd workers based all around the world, many of them working remotely from their homes. Their roles vary depending on the specific task at hand, but they are generally responsible for collecting and inputting data, verifying information, annotating, and providing feedback on the data collected.

Here are a few features of the FutureBeeAI crowd community:

10k+ data contributors

3k+ data annotators

2k+ transcriptionists

Located in 30+ countries

Access to 50+ languages

Ranges from common working professionals to modern tech enthusiasts

#2. World Class SOPs for Onboarding Crowd

Standard operating procedures (SOPs) are essential for ensuring the success and reliability of a data collection project that involves a global crowd. Here are the SOPs the FutureBeeAI Data Acquisition team has made for onboarding a global crowd in any human-made data sourcing and preparation project:

Explaining the scope and objectives of the project clearly and communicating them to the potential participants.

Following a clear and detailed process for recruiting participants, including how to screen and select them.

Training participants on the task, including any necessary background information, instructions, and examples. These training sessions are produced by the in-house team of reviewers, language experts, and field experts.

Compensating participants at fair country rates, safeguarding the diversity and well-being of the crowd.

Handling personal information in compliance with GDPR, with a data acquisition team that knows how to protect participants' data.

Maintaining fast turnaround times and prompt response rates; our communication and support are rated 5⭐ by many of our renowned customers.

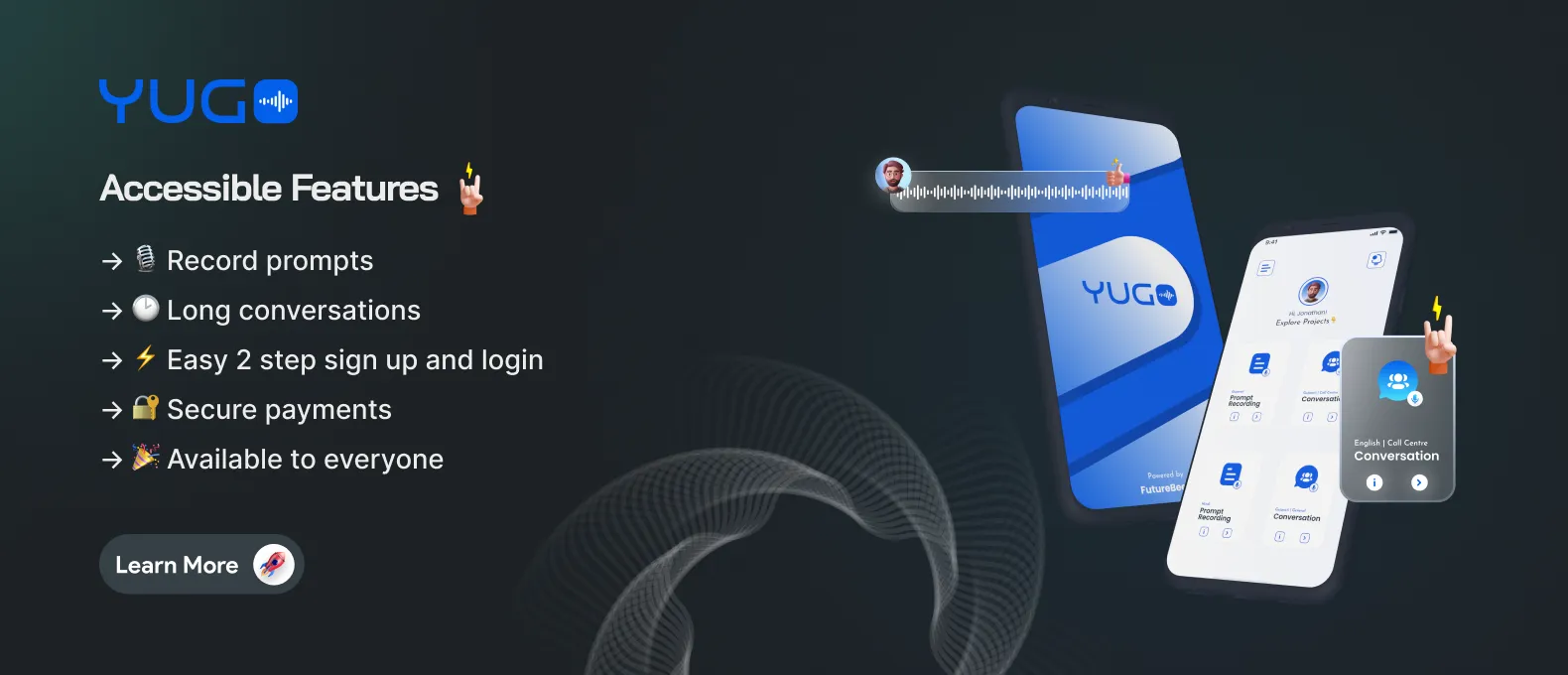

#3. In-house Tools for Data Sourcing

Every data sourcing project has a defined objective about how one can gather the required dataset. You can use many different open-source and freemium plan-based tools. As a developer or a data acquisition manager, you might be aware of some tools or may have your own tool. You should consider your medium for sourcing training data based on factors such as in-house availability or access to third-party tools, as well as the pros and cons.

We recently launched Yugo, our in-house platform for collecting speech datasets. Yugo features:

Seamless scripted monologue recording

Spontaneous conversation recording between 2 people

Separate channel for both speakers in conversation recording speech data

Structured metadata like age, gender, and country along with audio and corresponding text file

Extensive review phase
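
To make "structured metadata along with audio and corresponding text file" concrete, here is a purely hypothetical sketch of what one record in a speech dataset might look like. It is not Yugo's actual schema or export format: the class name `SpeechRecording`, every field name, and the file paths are assumptions invented for illustration.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class SpeechRecording:
    """Hypothetical record pairing an audio file with its transcript and speaker metadata."""

    audio_path: str       # assumed location of the audio file
    transcript_path: str  # assumed location of the matching text file
    speaker_age: int
    speaker_gender: str
    speaker_country: str
    channel: int          # which channel this speaker occupies in a two-person recording


# Example values are made up for illustration only.
record = SpeechRecording(
    audio_path="recordings/conversation_001.wav",
    transcript_path="recordings/conversation_001.txt",
    speaker_age=29,
    speaker_gender="female",
    speaker_country="IN",
    channel=0,
)

record_json = json.dumps(asdict(record), indent=2)
print(record_json)
```

Keeping metadata in a fixed structure like this makes it straightforward to filter a dataset, for example by country or age range, before training.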

#4. 2000+ Ready-to-Deploy Training Datasets

Have you ever wondered about having all the required training datasets in one place?

If so, then let us introduce you to FutureBeeAI’s training data store 🎉

Everyone in the industry wants the best and most reliable data gathering and preparation solution.

If you have read this far, you understand how crucial structured data is for any artificial intelligence model.

If you are the one who deals with data acquisition, data preparation, and data processing for your AI organization, then you are probably in constant search of solutions that can supply a continuous stream of datasets for your AI model requirements.

The FutureBeeAI datastore currently includes:

8 different categories of speech (672), image (35), and text (685) datasets

18 industries

55 use cases

50+ languages

Available in synthetic and real-world forms

Extensive source of synthetic and real-world datasets

Delivering training datasets that meet all required standards, in enough volume to train world-class AI innovations, has been our motto since the day we decided to step into the AI/ML domain. FutureBeeAI’s stable client base and our global AI community always fuel us to do more and challenge ourselves toward one common goal: humanizing AI.

#5. Data Preparation with Annotations

The data preparation, labeling, or annotation phase is a crucial step in the development of any machine learning model. During this phase, raw data is collected, cleaned, and organized in a format that can be used to train a model. This typically involves data preprocessing, which may include tasks such as normalizing or scaling the data, filling in missing values, and removing outliers.
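
The three preprocessing tasks named above can be sketched on a single numeric feature. This is a minimal example under stated assumptions, not a recommended pipeline: missing values are filled with the median, outliers are dropped using the common 1.5 x IQR rule, and the remaining values are min-max scaled to the range 0 to 1. The function name `preprocess` and the order of the steps are choices made for this illustration.

```python
from statistics import median, quantiles


def preprocess(values):
    """Fill missing values, drop outliers, and scale one numeric feature.

    `values` is a list of floats where None marks a missing entry.
    """
    # 1. Fill missing entries with the median of the observed values.
    observed = [v for v in values if v is not None]
    fill = median(observed)
    filled = [fill if v is None else v for v in values]

    # 2. Remove outliers that fall outside 1.5 * IQR of the quartiles.
    q1, _, q3 = quantiles(filled, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    kept = [v for v in filled if low <= v <= high]

    # 3. Min-max scale the remaining values into [0, 1].
    smallest, largest = min(kept), max(kept)
    if largest == smallest:
        return [0.0 for _ in kept]
    return [(v - smallest) / (largest - smallest) for v in kept]


cleaned = preprocess([10.0, 12.0, None, 11.0, 13.0, 500.0])
```

In this example the missing entry is replaced by the median and the value 500.0 is discarded as an outlier before scaling. Real projects usually pick these rules per feature, since a value that looks like an outlier in one column may be a valid rare case in another.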

The goal of data preparation and annotation is to create a high-quality, representative training dataset that can be used to train a machine learning model. This dataset should be balanced, meaning it should have a similar distribution of labels, and it should be diverse, meaning it should include examples of all the types of data the model will encounter in production. This will help ensure that the model is able to generalize well to new, unseen data.
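
One quick way to check whether a labeled dataset is balanced is to look at the share of each label. The sketch below assumes labels are available as a simple list of strings; the function names `label_distribution` and `imbalance_ratio` and the example labels are made up for illustration.

```python
from collections import Counter


def label_distribution(labels):
    """Return the fraction of examples carrying each label."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()}


def imbalance_ratio(labels):
    """Return the most frequent label count divided by the least frequent one.

    A value close to 1.0 suggests a balanced dataset; larger values
    mean some labels are under-represented.
    """
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())


# Illustrative labels only
labels = ["car"] * 6 + ["pedestrian"] * 3 + ["signal"] * 1
distribution = label_distribution(labels)
ratio = imbalance_ratio(labels)
```

A high ratio like the one in this example is a hint to collect or annotate more examples of the rare labels before training.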

Data labeling relies on annotation tools that label objects using the desired annotation method. For example, a self-driving car uses image recognition to identify and respond to traffic signals, pedestrians, and other vehicles on the road. The car's cameras capture images of the environment, and the image recognition algorithm processes the images in real time to identify and classify the objects within them. Depending on the ML architecture used, training images for such a system can be labeled with 2D bounding boxes or polygon annotations.

So, as stated above, these are the five reasons to leverage FutureBeeAI’s 5-finger, one-hand approach to achieve your desired model performance.
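
The difference between the two annotation types can be shown with a small data-structure sketch. The classes below are illustrative assumptions, not the format of any particular annotation tool: a bounding box stores two corner points, while a polygon stores an ordered list of vertices that traces the object's outline more tightly.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class BoundingBox:
    """Axis-aligned 2D box described by its top-left and bottom-right corners."""

    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        return (self.x_max - self.x_min) * (self.y_max - self.y_min)


@dataclass
class Polygon:
    """Outline of an object as an ordered list of (x, y) vertices."""

    label: str
    points: List[Tuple[float, float]]

    def area(self) -> float:
        # Shoelace formula over consecutive vertex pairs.
        total = 0.0
        for i, (x1, y1) in enumerate(self.points):
            x2, y2 = self.points[(i + 1) % len(self.points)]
            total += x1 * y2 - x2 * y1
        return abs(total) / 2

    def to_bounding_box(self) -> BoundingBox:
        # The tightest axis-aligned box that contains every vertex.
        xs = [x for x, _ in self.points]
        ys = [y for _, y in self.points]
        return BoundingBox(self.label, min(xs), min(ys), max(xs), max(ys))


# Illustrative triangle-shaped annotation
sign = Polygon("traffic_sign", [(0.0, 0.0), (4.0, 0.0), (0.0, 3.0)])
box = sign.to_bounding_box()
```

Here the polygon covers half the area of its enclosing box, which illustrates the trade-off: boxes are faster to draw, while polygons include less background around irregularly shaped objects.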
