Hey there! Ever wonder how computers can ‘see’ and understand pictures and videos, just like we do? Well, that's what we call computer vision! In this blog, we're going to explore two important things that help computers 'see': video data and image data.

To teach computers to 'see,' we need to show them lots of pictures and videos. That's where data comes in, it's like a big book of examples that helps computers learn what things look like and how they move.

Let's dive into what video and image datasets are and how they play a big role in making cool things happen!

What is Computer Vision?

Computer vision is like giving eyes to computers, allowing them to understand and interpret the visual world. It's a field of artificial intelligence that focuses on enabling machines to process, analyze, and make decisions based on visual information, much like the way humans perceive and interpret the world through their eyes.

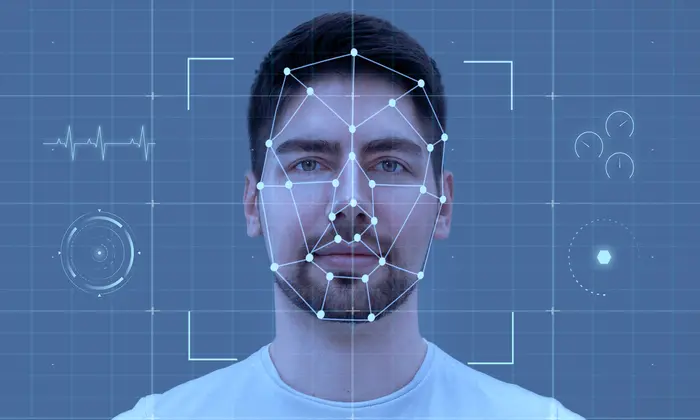

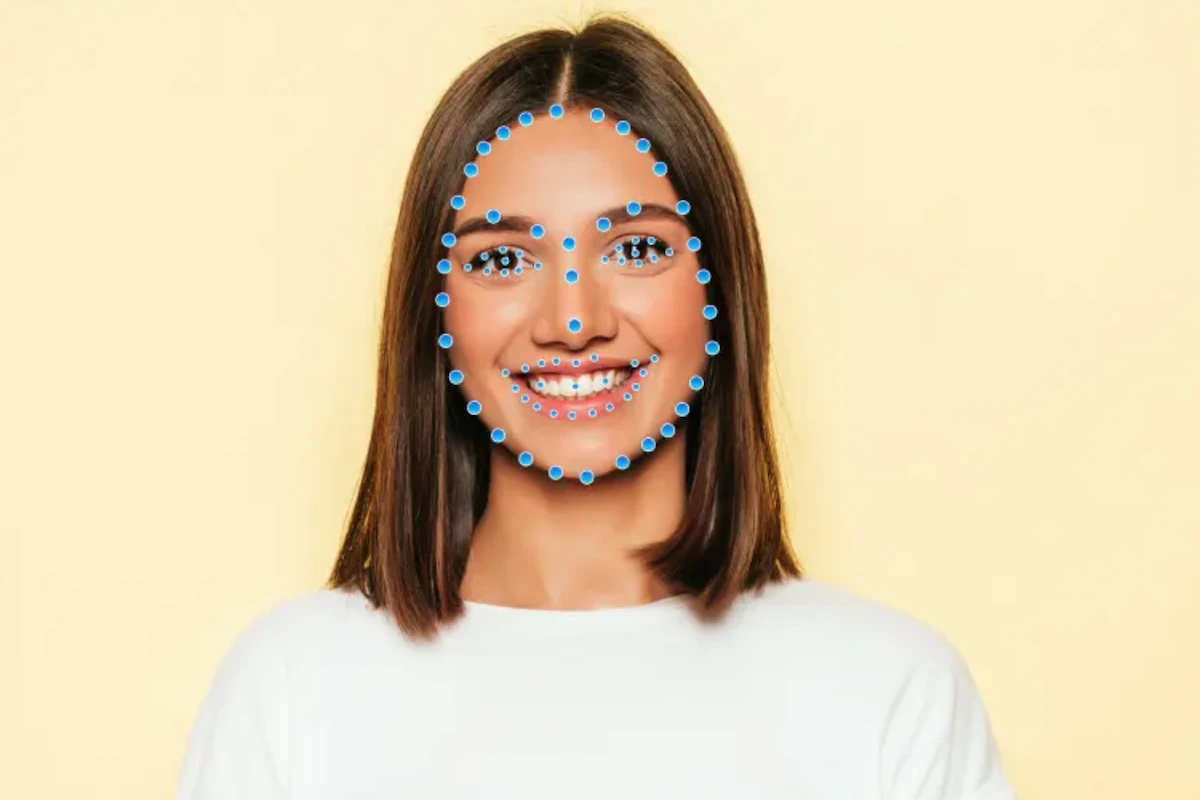

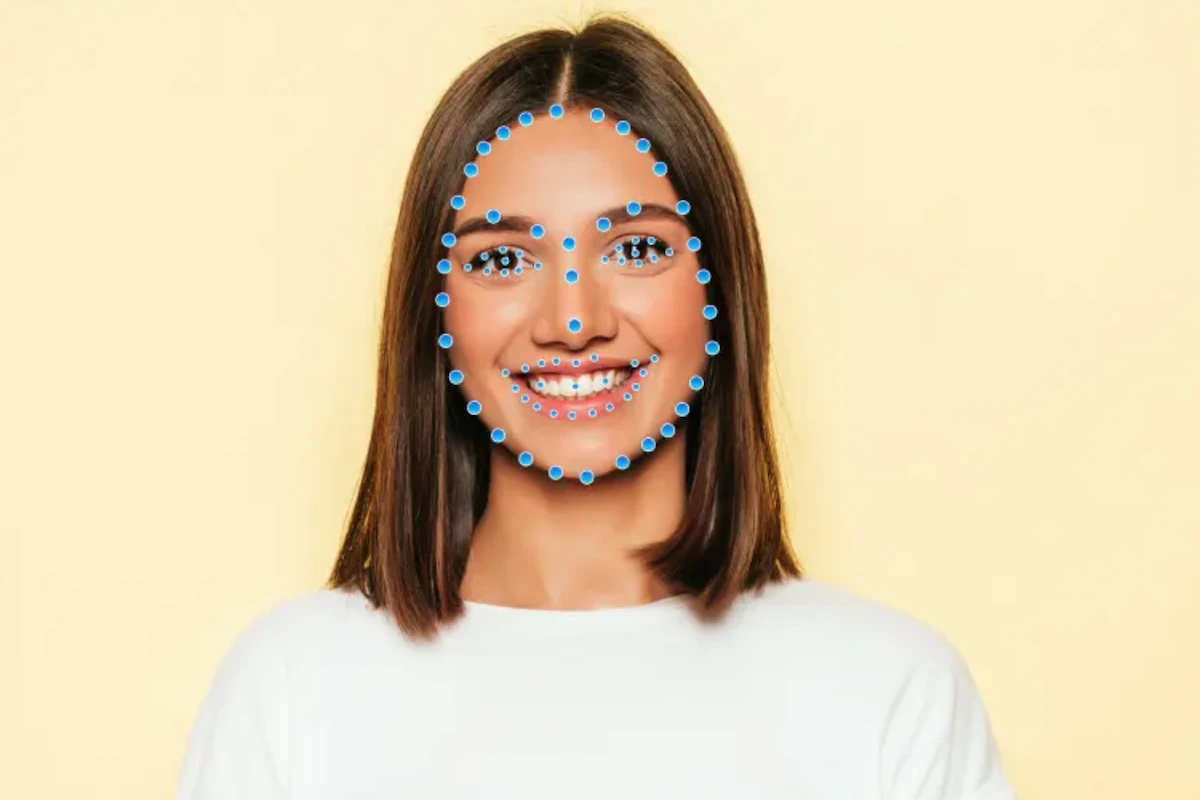

In simpler terms, computer vision involves teaching computers to 'see' and comprehend images and videos. It encompasses various tasks such as recognizing objects, understanding scenes, and even interpreting human gestures and facial expressions. The goal is to enable machines to extract meaningful insights from visual data, making them capable of performing tasks that traditionally required human vision and understanding.

So, the main ingredient here is visual training data.

What is Visual Training Data?

Visual training data refers to a set of images or videos that are used to train and teach computer vision models. These datasets play a crucial role in the development of algorithms for tasks such as image recognition, object detection, facial recognition, and other computer vision applications.

So, to train any computer vision based AI model, we need image training datasets and video training datasets. Also, based on the type of machine learning process and the given use case, we need to label and annotate images and videos.

Each image or video in the dataset is associated with annotations or labels that provide information about what is present in the image or video. For instance, in an image dataset for recognizing cats and dogs, each image would be labeled with information indicating whether it contains a cat or a dog and with this label, we can also implement different types of annotations like 2D bounding box,polygons, etc.

The quality and diversity of visual training data are essential factors in the effectiveness of computer vision models. A well-curated dataset with a broad range of images, representing different scenarios, lighting conditions, and perspectives, helps the model generalize well to real-world situations.

Now that we know that training data is an essential part of building any AI model, different use cases and industries need different kinds of datasets. Let’s discuss some of them in brief.

Visual Training Data for Healthcare

In healthcare, the use of visual training data is crucial for training computer models to understand and interpret medical images. Image datasets consist of a diverse range of X-rays, MRIs, CT scans, and pathology slides, each meticulously labeled to help machines recognize and learn from different medical conditions. These datasets serve as a foundational tool for training algorithms to identify patterns, abnormalities, and nuances within medical images.

By continually refining and expanding these visual training datasets, we enable machines to better comprehend medical imagery, leading to more accurate diagnostics and ultimately enhancing the overall quality of patient care.

In our upcoming blog series, we will discuss different medical training image and video datasets in detail.

Visual Training Data for Manufacturing and Robotics

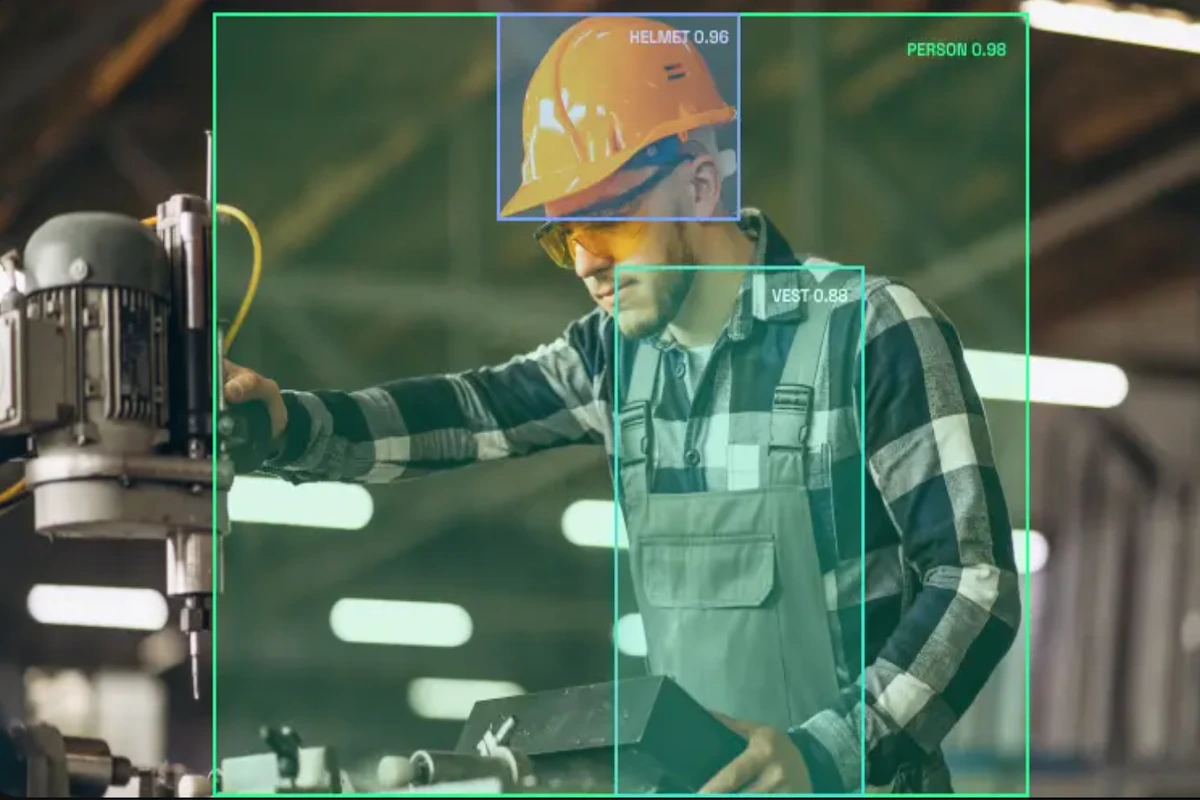

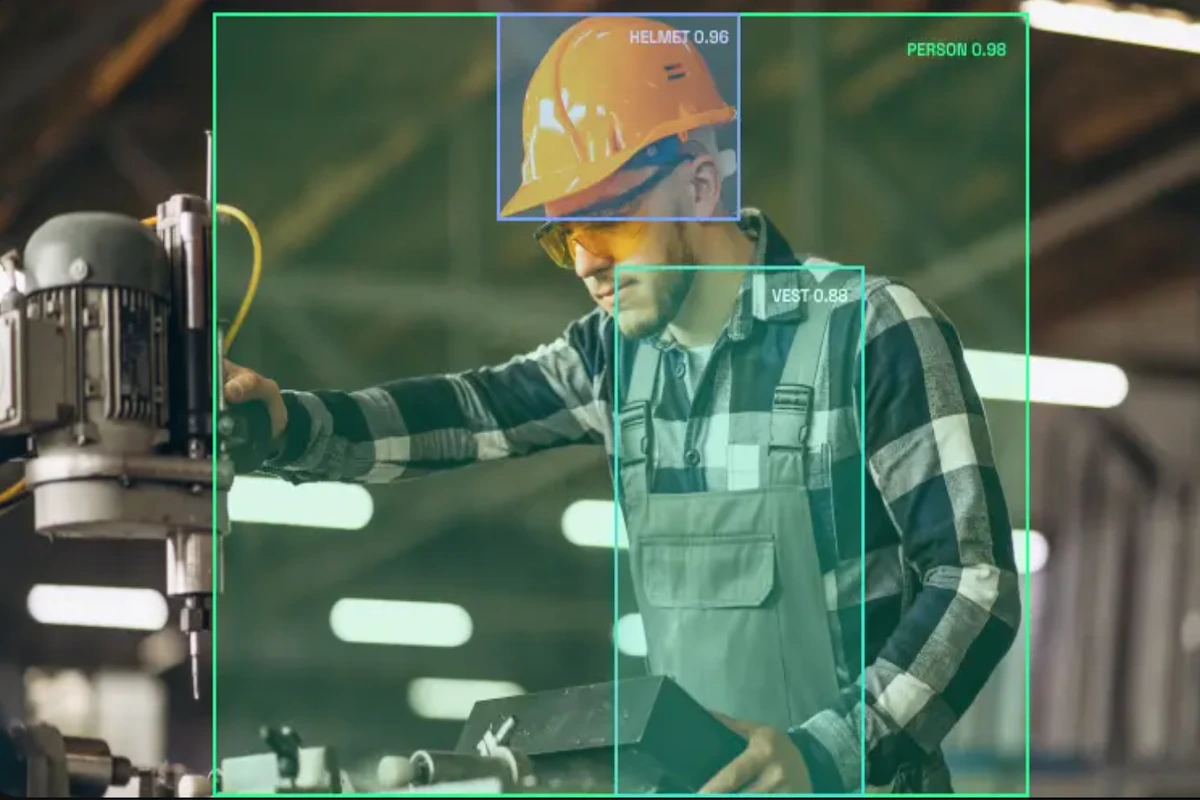

For the manufacturing and robotics industries, visual training data is fundamental for teaching computer models to navigate and understand the complexities of industrial environments. Image datasets in this context include annotated images of manufactured products, aiding in defect detection and quality control. These datasets are essential for training models to recognize variations and anomalies in the production process. Moreover, video datasets capture diverse robotic movements and actions, contributing to the training of robots for tasks such as object manipulation and assembly line operations.

Apart from products related training data, annotated data, including PPE kits and forklifts, can be helpful to get an idea of the overall working environment and the safety of workers.

Visual Training Data for Retail and E-commerce

In the retail and e-commerce sector, visual training data serves as a cornerstone for enhancing computer systems capabilities in understanding and responding to visual information. Image datasets consist of labeled product images, enabling models to categorize items and provide accurate recommendations to users. These datasets play a vital role in training algorithms to recognize diverse products, improving the overall shopping experience.

Additionally, video datasets capture customer behaviors within retail spaces, offering insights into preferences and interactions. This visual training data aids in optimizing checkout processes and minimizing errors. By incorporating these datasets, we empower machines to navigate the visual landscape of retail, offering personalized experiences and streamlining operational processes.

Visual Training Data for Autonomous Vehicles

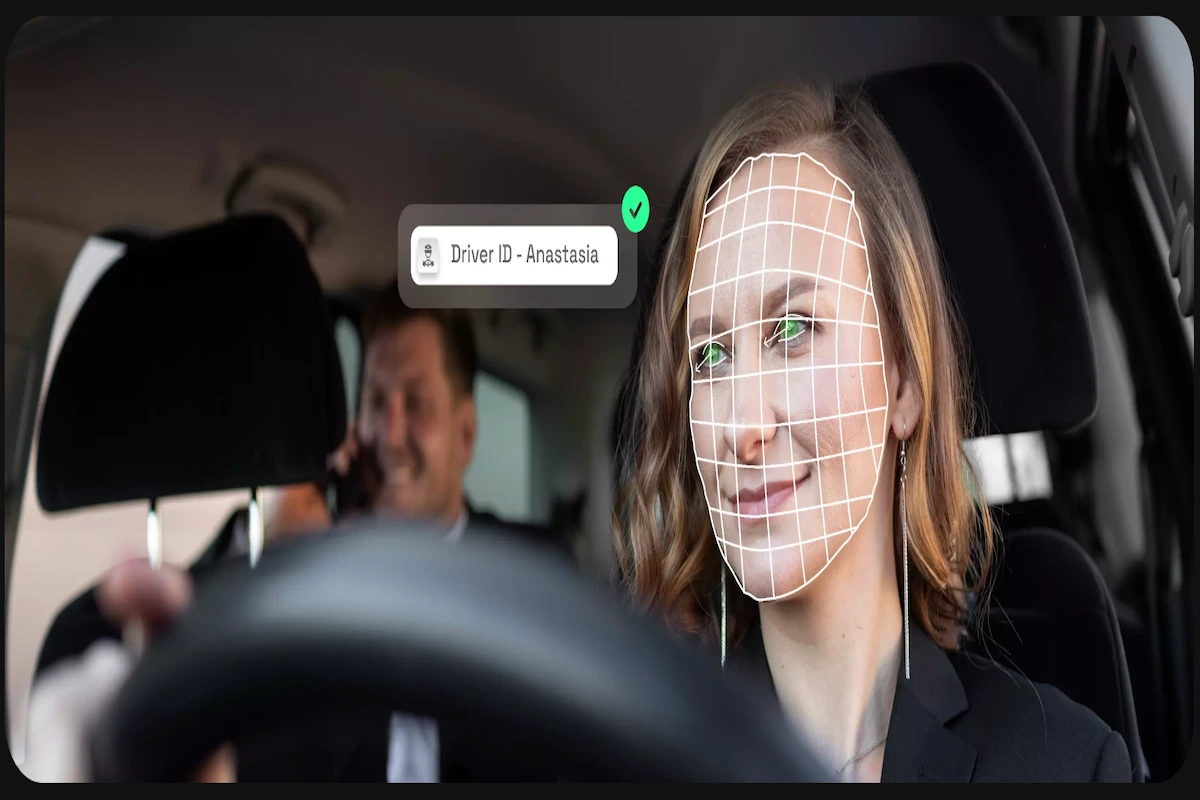

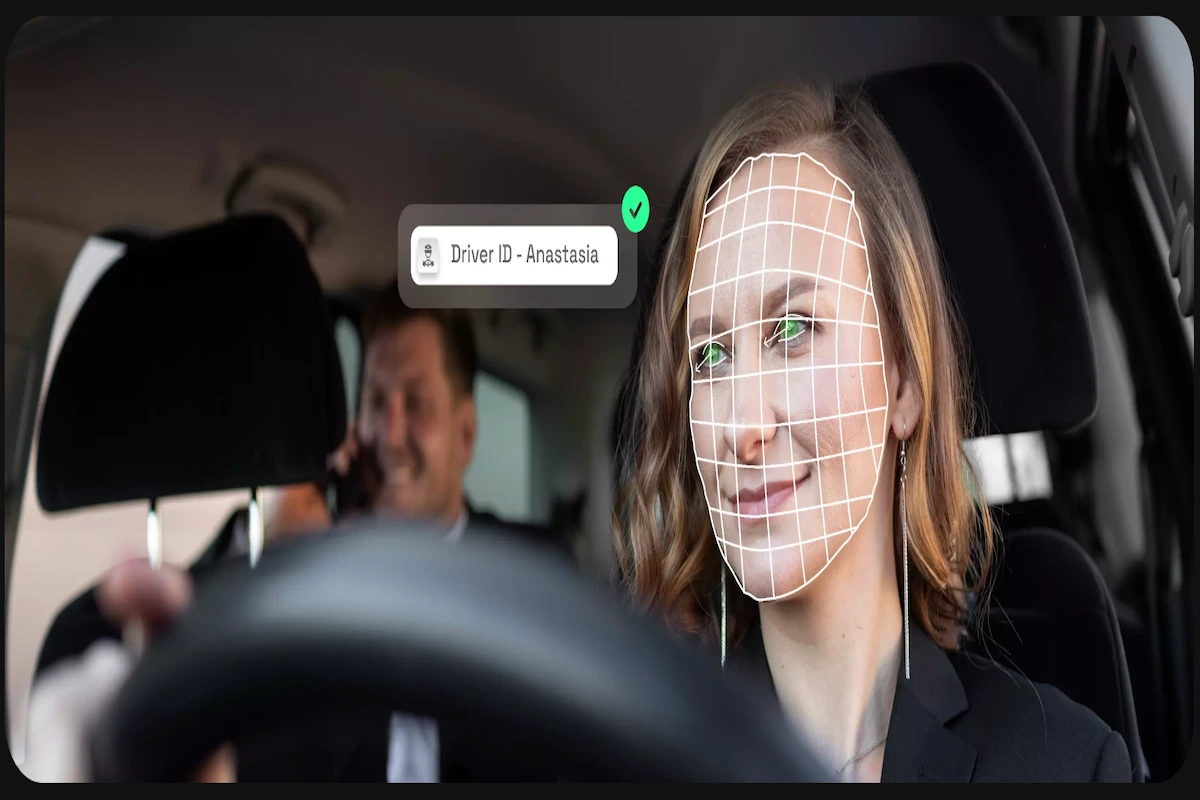

In the realm of autonomous vehicles, visual training data plays a pivotal role in teaching computer models to navigate not only the external environment but also to understand and respond to internal factors. Image datasets include annotated images of road scenes, featuring labeled objects like pedestrians, vehicles, and traffic signs. These datasets are essential for training models to recognize and respond to diverse elements in the driving environment.

In the realm of autonomous vehicles, visual training data plays a pivotal role in teaching computer models to navigate not only the external environment but also to understand and respond to internal factors. Image datasets include annotated images of road scenes, featuring labeled objects like pedestrians, vehicles, and traffic signs. These datasets are essential for training models to recognize and respond to diverse elements in the driving environment.

Moreover, video datasets capture a range of scenarios, including driver activities within the vehicle. These videos provide insights into various driver behaviors, helping autonomous systems understand and respond to human actions, enhancing the overall safety and adaptability of self-driving vehicles.

By incorporating comprehensive visual training data, autonomous vehicles become adept at interpreting both external road conditions and internal driver activities, ensuring a holistic approach to safe and efficient navigation.

Visual Training Data for Agriculture

In the agricultural domain, visual training data is a crucial resource for teaching computer models to understand and optimize various aspects of farming practices. Image datasets in agriculture encompass annotated images of crops, fields, and plants, aiding in tasks like crop monitoring and disease detection. These datasets are fundamental for training algorithms to recognize patterns related to crop health, growth stages, and potential issues.

Visual Training Data for Finance

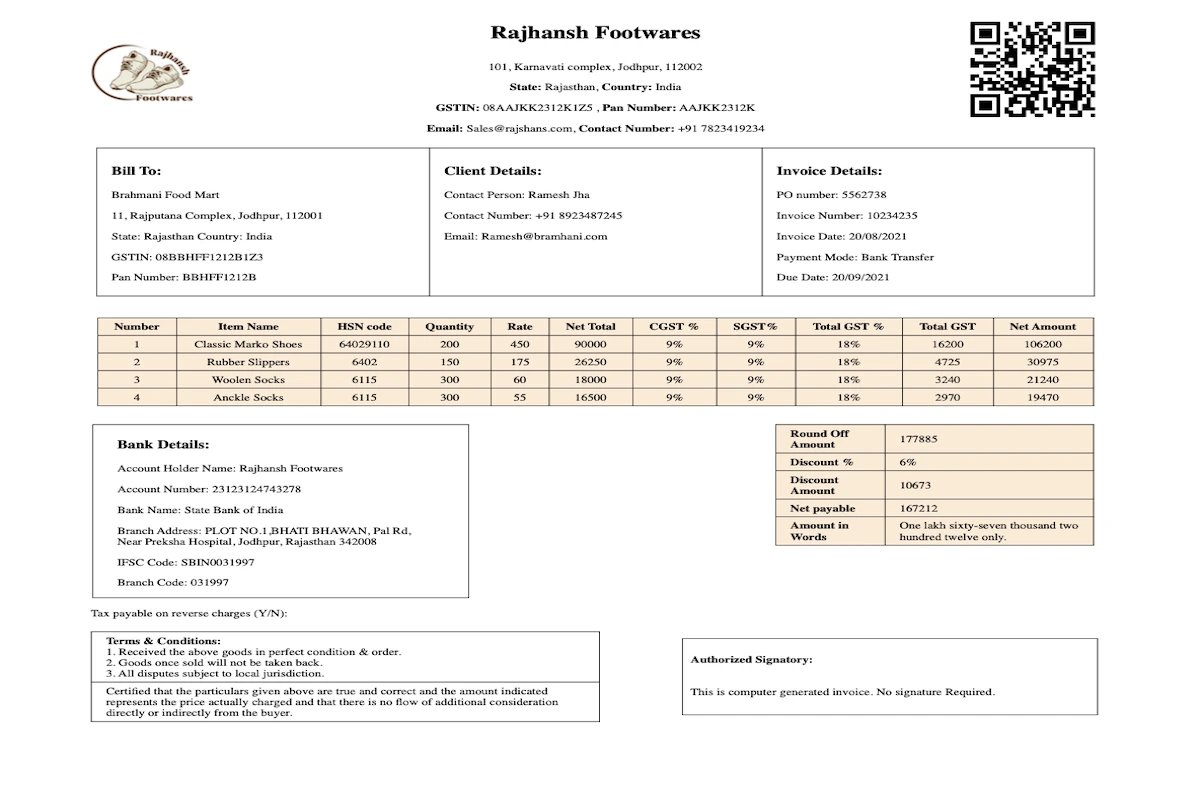

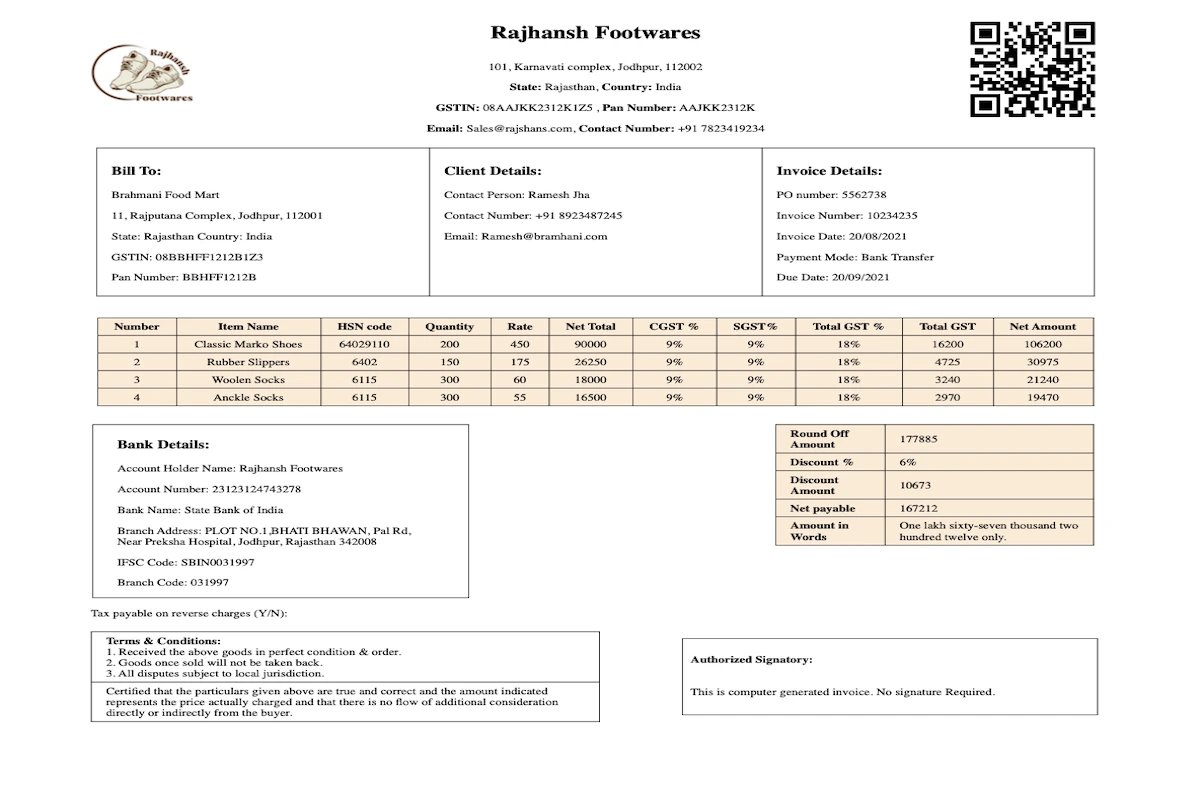

In the finance sector, visual training data plays a crucial role in document processing through computer vision, particularly in text recognition applications. Image datasets include various financial documents, such as invoices, receipts, and statements, meticulously annotated to train models for accurate text extraction. These datasets are essential for teaching algorithms to recognize and interpret different fonts, layouts, and languages commonly found in financial paperwork.

In the finance sector, visual training data plays a crucial role in document processing through computer vision, particularly in text recognition applications. Image datasets include various financial documents, such as invoices, receipts, and statements, meticulously annotated to train models for accurate text extraction. These datasets are essential for teaching algorithms to recognize and interpret different fonts, layouts, and languages commonly found in financial paperwork.

By leveraging comprehensive visual training data in finance, computer vision systems become adept at automating tasks related to document processing, streamlining operations, reducing errors, and improving the overall efficiency of financial processes.

Visual Training Data for Safety and Surveillance

In the realm of safety and surveillance, the importance of visual training data lies in its ability to teach computer systems to understand and respond to various visual cues. For instance, image datasets consist of carefully labeled pictures, helping models recognize specific objects or individuals in different scenarios. These datasets might include images of people wearing safety gear or potential threats.

On the video side, datasets capture real-time footage, providing a continuous stream of information for the models to learn from. Whether it's monitoring crowded places for unusual behavior or tracking movements in industrial settings, these datasets serve as a foundation for training models to identify and respond to potential risks.

On the video side, datasets capture real-time footage, providing a continuous stream of information for the models to learn from. Whether it's monitoring crowded places for unusual behavior or tracking movements in industrial settings, these datasets serve as a foundation for training models to identify and respond to potential risks.

Similarly, we can have video and image training data for real estate that can be helpful to check property conditions. Other than industry specific handwritten text, image datasets are helpful in building AI models that can transcribe handwritten text.

We will continue this blog by introducing new industry specific visual datasets.

FutureBeeAI: Visual Training Data Provider

We offer custom and industry specific video and image training data collection services, as well as ready to use 200+ datasets for different industries. Our datasets includefacial training data, driver activity video data, human historical images, handwritten and printed text images, and many others. We can also help you label and annotate your image and video data.

We have built some SOTA platforms that can be used to prepare the data with the help of our crowd community from different domains.

You can contact us for samples and platform reviews.

In the realm of autonomous vehicles, visual training data plays a pivotal role in teaching computer models to navigate not only the external environment but also to understand and respond to internal factors. Image datasets include annotated images of road scenes, featuring labeled objects like pedestrians, vehicles, and traffic signs. These datasets are essential for training models to recognize and respond to diverse elements in the driving environment.

In the realm of autonomous vehicles, visual training data plays a pivotal role in teaching computer models to navigate not only the external environment but also to understand and respond to internal factors. Image datasets include annotated images of road scenes, featuring labeled objects like pedestrians, vehicles, and traffic signs. These datasets are essential for training models to recognize and respond to diverse elements in the driving environment. In the finance sector, visual training data plays a crucial role in

In the finance sector, visual training data plays a crucial role in  On the video side, datasets capture real-time footage, providing a continuous stream of information for the models to learn from. Whether it's monitoring crowded places for unusual behavior or tracking movements in industrial settings, these datasets serve as a foundation for training models to identify and respond to potential risks.

On the video side, datasets capture real-time footage, providing a continuous stream of information for the models to learn from. Whether it's monitoring crowded places for unusual behavior or tracking movements in industrial settings, these datasets serve as a foundation for training models to identify and respond to potential risks.