How can LLMs be trained on specialized domains like medicine or law?

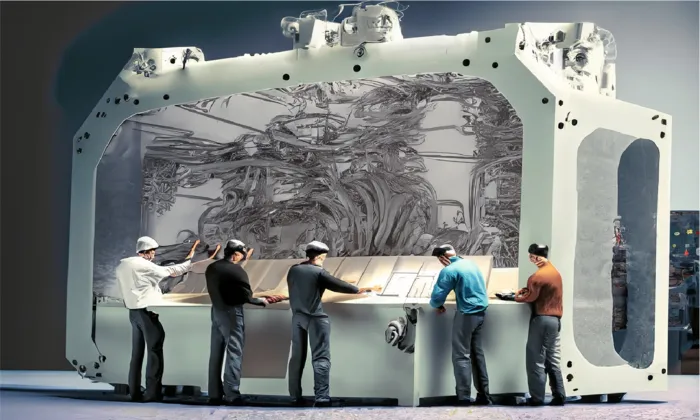

Diagram labels: Domain Specific Data, LLM, Fine Tuning

Large Language Models (LLMs) can be adapted to specialized domains like medicine or law through several complementary strategies:

1. Domain-specific datasets: Collect text from the domain itself, such as medical articles or legal texts.
2. Fine-tuning: Continue training a pre-trained LLM on the domain-specific dataset so that its weights adapt to the new domain.
3. Domain adaptation: Apply techniques such as transfer learning or prompt engineering. Prompt engineering steers the model at inference time and does not update its weights.
4. Expert annotations: Have domain experts label the data to provide high-quality training examples.
5. Domain-specific pre-training: Pre-train the LLM on a large corpus of domain-specific text before fine-tuning it on a specific task.
6. Multitask learning: Train the LLM on several tasks simultaneously, such as medical text classification and question answering.
7. Domain-specific vocabulary: Make sure the domain's vocabulary and terminology are well represented in the training data.

Combined, these strategies help an LLM give more accurate and informative responses to domain-specific queries.
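The effect of continuing training on domain text (strategies 2 and 5) can be illustrated with a deliberately tiny stand-in for an LLM. The sketch below is not how LLMs are actually trained: it uses a count-based bigram model, and the class `BigramLM`, the smoothing value `alpha`, and all example sentences are assumptions made up for illustration. It only shows the general idea that a model first trained on general text tends to predict held-out domain text better after further training on domain data.

```python
import math
from collections import Counter


def tokenize(sentence):
    # Whitespace tokenization with sentence boundary markers.
    return ["<s>"] + sentence.lower().split() + ["</s>"]


class BigramLM:
    """Toy bigram language model with additive smoothing (hypothetical helper)."""

    def __init__(self, vocab_size, alpha=0.1):
        self.vocab_size = vocab_size  # fixed up front, like a tokenizer vocabulary
        self.alpha = alpha            # smoothing constant (assumed value)
        self.bigrams = Counter()
        self.contexts = Counter()

    def train(self, sentences):
        # Calling train() again adds to the existing counts, which plays
        # the role of continued training on new data.
        for sentence in sentences:
            tokens = tokenize(sentence)
            for prev, cur in zip(tokens, tokens[1:]):
                self.bigrams[(prev, cur)] += 1
                self.contexts[prev] += 1

    def perplexity(self, sentences):
        # Lower perplexity means the model predicts the text better.
        log_prob, n = 0.0, 0
        for sentence in sentences:
            tokens = tokenize(sentence)
            for prev, cur in zip(tokens, tokens[1:]):
                num = self.bigrams[(prev, cur)] + self.alpha
                den = self.contexts[prev] + self.alpha * self.vocab_size
                log_prob += math.log(num / den)
                n += 1
        return math.exp(-log_prob / n)


# Made-up example corpora (illustrative only).
general_corpus = [
    "the cat sat on the mat",
    "the dog ate the food",
    "she read the book on the train",
]
medical_corpus = [
    "the patient reported severe chest pain",
    "the doctor prescribed aspirin for chest pain",
    "the patient was given aspirin",
    "aspirin reduces the risk of heart attack",
]
medical_heldout = [
    "the patient reported chest pain",
    "the doctor prescribed aspirin",
]

# Fix the vocabulary size from all text so both evaluations are comparable.
all_text = general_corpus + medical_corpus + medical_heldout
vocab_size = len({tok for s in all_text for tok in tokenize(s)})

model = BigramLM(vocab_size)
model.train(general_corpus)                  # "pre-training" on general text
before_ppl = model.perplexity(medical_heldout)

model.train(medical_corpus)                  # continued training on domain text
after_ppl = model.perplexity(medical_heldout)

print(f"perplexity before domain training: {before_ppl:.2f}")
print(f"perplexity after domain training:  {after_ppl:.2f}")
```

With a real LLM the same before-and-after comparison would be run on a held-out domain test set, typically using a training library rather than hand-written counting.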
