We Use Cookies!!!

We use cookies to ensure that we give you the best experience on our website. Read cookies policies.

Computer vision is a technology for today and the future that is revolutionizing industries. Over the years, humans have developed many computer vision models that can interpret our visual world. We all have heard of object detection software, self-driving cars, facial recognition software, medical imaging, etc.

Amazon GO stores, for instance, use computer vision to keep track of constantly changing inventory levels. This makes it possible for Amazon to manage all the moving parts required to support its friction-free shopping process with zero cashiers required.

Organizations use computer vision facial recognition techniques for KYC-like applications. This makes it more convenient for organizations to onboard their customers, and people don’t have to reach physical offices for the same.

It is very obvious that computer vision technology has the potential to revolutionize a wide range of industries, but the key to any successful computer vision model is a structured training dataset.

So let's discuss what makes a robust computer vision AI model in this article. We'll begin with the fundamentals: defining computer vision, understanding data annotation, and discussing various types of data labeling and annotation.

In simple words, "computer vision" is a field of artificial intelligence and computer science that aims to enable computers to understand and interpret visual data from the world around them, just as humans do. It involves the development of algorithms and systems that can automatically analyze, understand, and interpret images and video data.

Some key techniques and technologies used in computer vision include image and video processing, machine learning, deep learning, and pattern recognition. These techniques are used to extract and analyze features from visual data and make predictions or decisions based on that analysis.

This can include tasks such as object recognition, image classification, scene understanding, and many more. It has a wide range of applications, including self-driving cars, image search engines, and facial recognition systems. However, computer vision requires a high-quality and structured training dataset, which necessitates data annotation, in order to effectively understand our visual world. Data annotation allows algorithms to accurately interpret and understand visual data and make predictions or decisions based on a given analysis.

An essential step in the creation and training of computer vision algorithms and systems is data annotation. The process of labeling and adding metadata to data in various formats, such as text, images, or video, so that machines can understand it is known as data annotation. There are various types of data annotation techniques that can be used, depending on the specific needs of the machine learning task at hand. Similarly, for any computer vision application to perform a specific task, data annotation is crucial in order to understand the features associated with that task.

To comprehend the visual world around the model, computer vision technology needs to be fed with image and video data and to make the data structured, we need to perform image annotation or video annotation.

Image annotation is the process of labeling and categorizing images in a structured way in order to provide context and meaning to the data. In this process, we label the objects of interest in the images with specific labels so the model can build patterns and develop an understanding of the object. Image annotation can be used for a wide range of applications, including facial recognition, image classification, medical image analysis, defect inspection , etc., that can transform various sectors and industries around us.

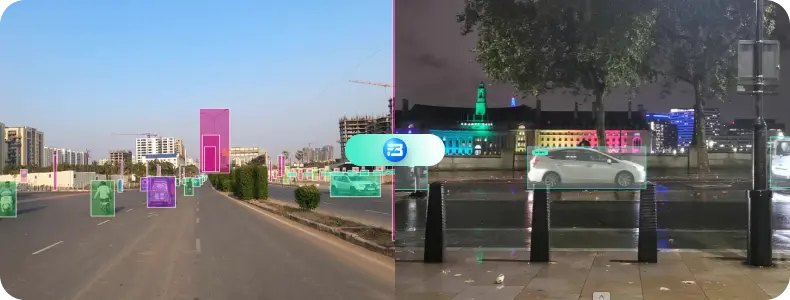

Video annotation is the process of labeling and categorizing video data in a structured way in order to provide context and meaning to the data. Video annotation can be used for a wide range of applications, including object tracking, activity recognition, and event detection. It involves labeling specific objects or regions within a video frame and tracking those objects or regions over time.

Image and video annotation can be done with many techniques, depending on the data and complexity of the use cases.

This is the simplest annotation type; in it, annotators create a box around the object of interest at a particular frame and location. The box should be as close to every edge of the object as possible. 2D bounding boxes are generally used for simple object shapes, and they can be done quickly compared to other methods.

One specific application of bounding boxes would be autonomous vehicle development.

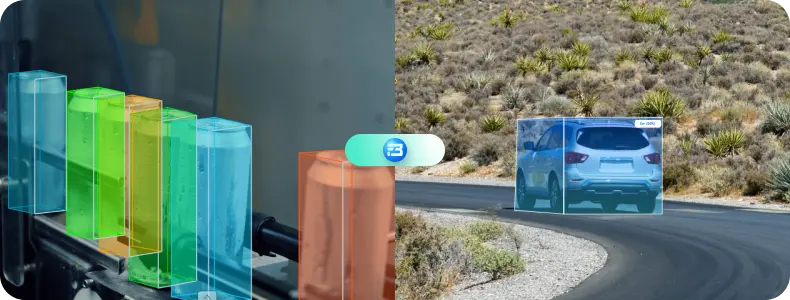

A 3D bounding box is also known as a cuboid. It is similar to a 2D bounding box; the only difference is that a 3D bounding box also covers the depth of objects, while a 2D bounding box only covers length and width. With 3D cuboid annotation, human annotators draw a box encapsulating the object of interest and place anchor points at each of the object’s edges.

3D bounding boxes can also be used for autonomous vehicle development.

Many times, 2D and 3D bounding can't fit some objects because of their irregular, odd shapes and orientation with the image, so the polygon comes in handy. Polygons are created around the objects by joining dots or by using a brush. This can be used when developers want more precise results for use cases like medical cancer identification, identifying different parts in one object, detecting defects on a building or car, etc.

Polyline annotation is a fantastic annotation technique. As its name suggests, annotators simply draw lines along the boundaries you require your machine to learn. Lines and splines can be used to train warehouse robots to accurately follow any path, and they can also be used to train autonomous vehicles to understand boundaries and stay in one lane without veering.

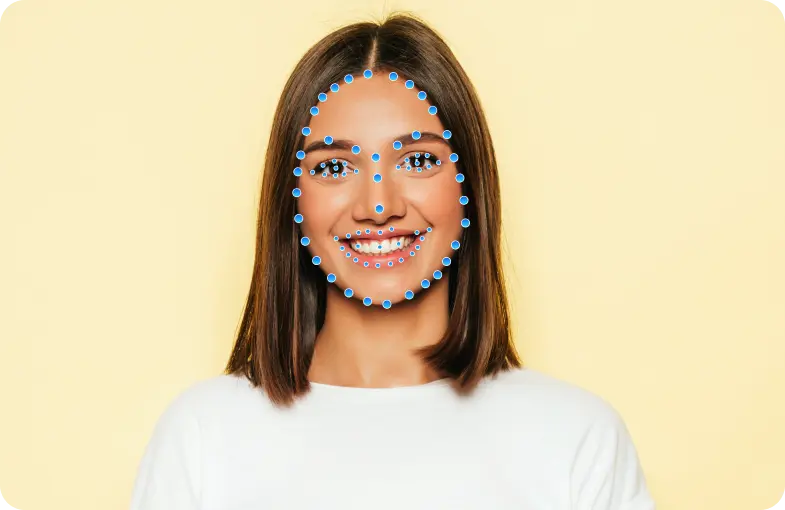

Keypoint annotation, also known as landmark annotation or dot annotation, is the process of identifying and labeling specific points of interest on an object in an image. These points are often referred to as "keypoints" or "landmarks." Keypoint annotation is commonly used in machine learning and computer vision tasks such as pose estimation, facial recognition, exploring the relationship between different body parts, and object tracking.

Skeleton annotation is the process of identifying and labeling the key points or joints on a human or animal body in an image or video. We can also defined this as a network of keypoints connected by vectors.

The goal of skeleton annotation is to enable the detection and tracking of human or animal bodies in an image or video sequence. This can be used for a variety of purposes, such as analyzing human or animal movement, analyzing posture, or identifying specific actions or gestures.

To annotate a skeleton, annotators typically identify and label the key points or joints on a human or animal body, such as the head, shoulders, elbows, wrists, hips, knees, and ankles. These keypoints are then used to create a skeleton, which represents the underlying structure of the body.

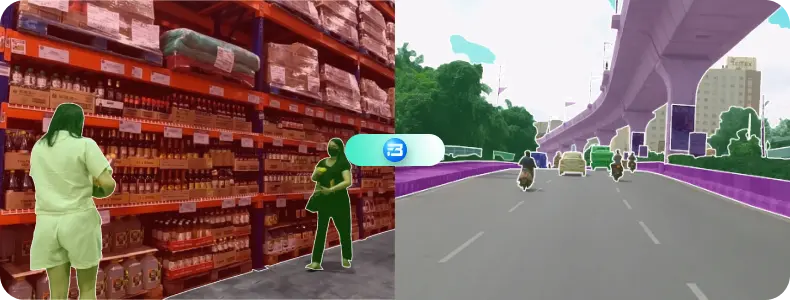

Semantic segmentation can be defined as grouping of same parts or pixels of any target object in a given image or clustering parts of an image together which belong to the same object class. In this segmentation all the parts with same class will get same pixel value. Let’s understand this with an example: Suppose that there are people in an image you going to annotate. Semantic segmentation will detect person as object and in this case all person belongs to the same class and will get same pixel value for different intances.

The above-explained types of annotation give identity to big data and make them usable for training AI models, but there may be some more methods to annotate data as per specific cases, and you may have the question of which data annotation is right for me. These things depend on the specific use case.

Annotation can be done manually or automatically. We can use pre-trained models to automatically label new unlabeled data, but for complex data, such as in medical use cases, manual annotation is preferred.

Let’s assume that your team has already gathered 10,000 animal images from FuturebeeAI for training your model to recognize different animals. The next step is annotating the dataset, because it is not enough to just show your model images that contain animals. These images also contain other things: backgrounds, other objects like trees or people, and any number of other distractions.

So, your model needs to identify which part of each image contains an animal and which animal any particular image has. This added information in the raw data obtained using the annotation technique helps the model learn and understand all the features around that task. In the process of building any robust computer vision model, you as a developer need to identify the different features the AI model needs to deal with, and based on that, you have to select the labels and type of annotation to do. Type of annotation and number of labels to focus is very subjective and it depends on the type of solution you are building and complexity of the task.

In modern computer vision, the most crucial bottleneck for AI developers is a lack of effective, structured, and scalable training data. Most computer vision AI models are based on supervised machine learning models. Supervised models require labeled data in huge quantities. Traditionally, obtaining these datasets involves two main stages: data gathering and data annotation.

Obsessed with helping AI developers over 5 years in the industry, we here at FutureBeeAI thrive on world-class practices to deliver annotation solutions in every stage of AI dataset requirements, from selecting the right type of data and structuring unstructured data to stage-wise custom data collection and pre-labeled off-the-shelf datasets.

We hope you have a better understanding of data annotation for computer vision-based AI development!

Book a call with our data acquisition manager for spam-free advice right now!