We Use Cookies!!!

We use cookies to ensure that we give you the best experience on our website. Read cookies policies.

What can be our objective with any machine learning model? The fundamental objective of any machine learning model is to learn from data and generalize that learning to make accurate predictions or decisions on new data. In other words, the goal of a machine learning model is to identify patterns or relationships in the training data that can be used to make predictions or decisions based on new, unseen data. That’s all that we want from any machine-learning model, right?

But when any machine learning model fails to achieve this objective, one of the most common reasons is overfitting. Although we have explored overfitting and underfitting in detail in our blog, “The Simplest Guide on Overfitting and Underfitting," let’s quickly recap it and then explore some obvious but mainly overlooked ways to prevent it from happening.

Overfitting is an undesirable consequence of machine learning, where the machine learning model doesn’t perform its fundamental objective of making an accurate prediction on new, unseen data.

While training any machine learning model, whether it is a speech recognition model, a computer vision model, or a natural language processing model, data scientists feed the particular model with an unbiased and diverse labeled training dataset.

It is expected that in this training phase, the model will identify the patterns and relationships in the training dataset and learn how to make predictions when it encounters new, real-world, unseen datasets.

It is a phenomenon in which the machine learning model becomes too closely tailored or "over-adapted" to the training data it was trained on, resulting in poor generalization to new, unseen data. When such a trained model performs very well on the training dataset but performs poorly on unseen datasets, this occurrence is referred to as "overfitting."

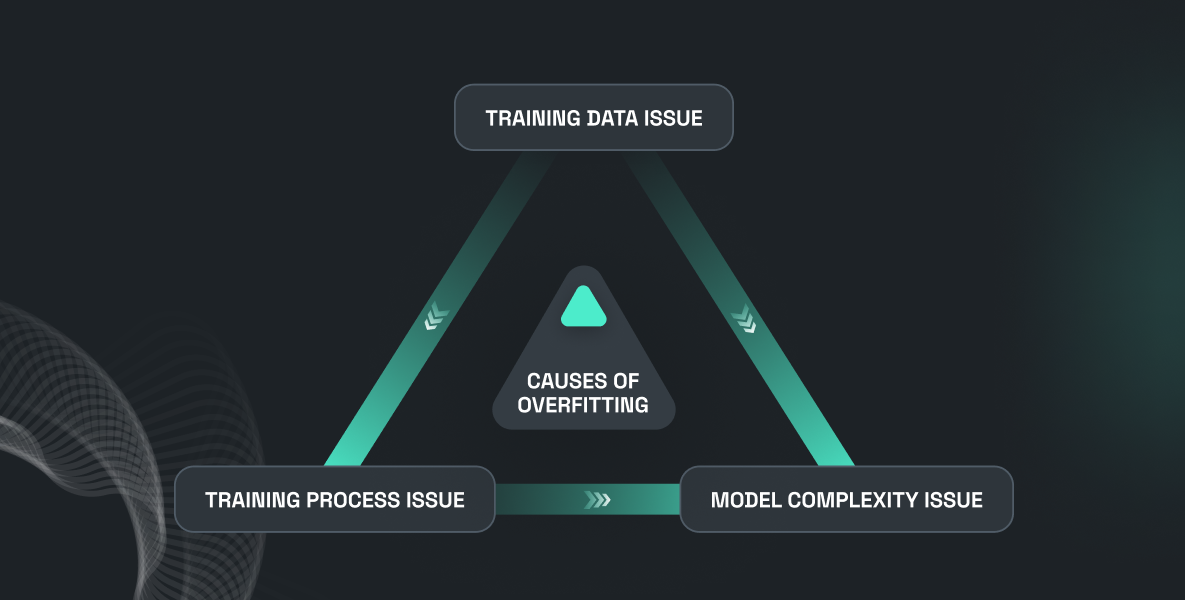

Before we explore potential ways to overcome or prevent it, we need to understand why it happens at the first step, right?

So the common reasons for overfitting can be related to training data, the training process, and model complexity.

●When the model is trained on a small set of training data, it cannot learn enough or form a prediction pattern to generalize to new data.

Let’s say we want to build a dog detection model, but if we train the model with 50 images of dogs that too are similar in type, then it is obvious that such a trained model will not perform accurately on new data. That’s how overfitting occurs in object detection models.

●When the training dataset contains too much irrelevant and biased information. In that case, the model builds some unnecessary patterns based on such noisy data. Such a model will perform poorly because it cannot generalize new data.

Let’s say we want to build a facial recognition model that can recognize any individual around the globe. Now, if to train this model we give only specific demographic facial images as training data, that too with similar lighting and background conditions, then such a biased dataset will not help such a facial recognition model generalize and perform well when encountered with a different demographic facial image

●Training time is also an important aspect. If any model is trained on a single dataset for too long, then rather than learning the underlying patterns and relationships the model starts to memorize the data, and that leads to overfitting.

●When the selected model is too complex with too many parameters and when such a model is trained on any dataset it is easy for such a model to learn not only the underlying patterns and relationships in the training data but also the noise and random fluctuations in the data

These are the most common reasons why overfitting occurs. Now let’s explore what ways we have to prevent overfitting from happening.

Let’s group all these solutions in terms of training data, training process, and model complexity.

Training data quality directly impacts the machine learning model's accuracy. We need to make sure that the training dataset that we are using is of high quality and has enough volume.

The labeled dataset that we are feeding to the machine learning model must contain all the parameters on which we need to train our model. It is important to ensure that the dataset is unbiased and representative of the real-world data to which the model will be applied. Biased datasets can lead to models that are overfitted to certain types of data or underperform on certain subgroups of the data.

A large and diverse dataset can help the model learn the underlying patterns and relationships in the data without memorizing the data itself. By including a wide range of examples in the dataset, the model is less likely to become overfitted to any one specific type of data or feature. Training models on high-quality datasets is the most obvious way to prevent overfitting.

If we want to train our object identification model to identify chairs and a table, then we need to make sure that our training dataset contains enough images of different types of chairs and tables in different conditions, orientations, and backgrounds.

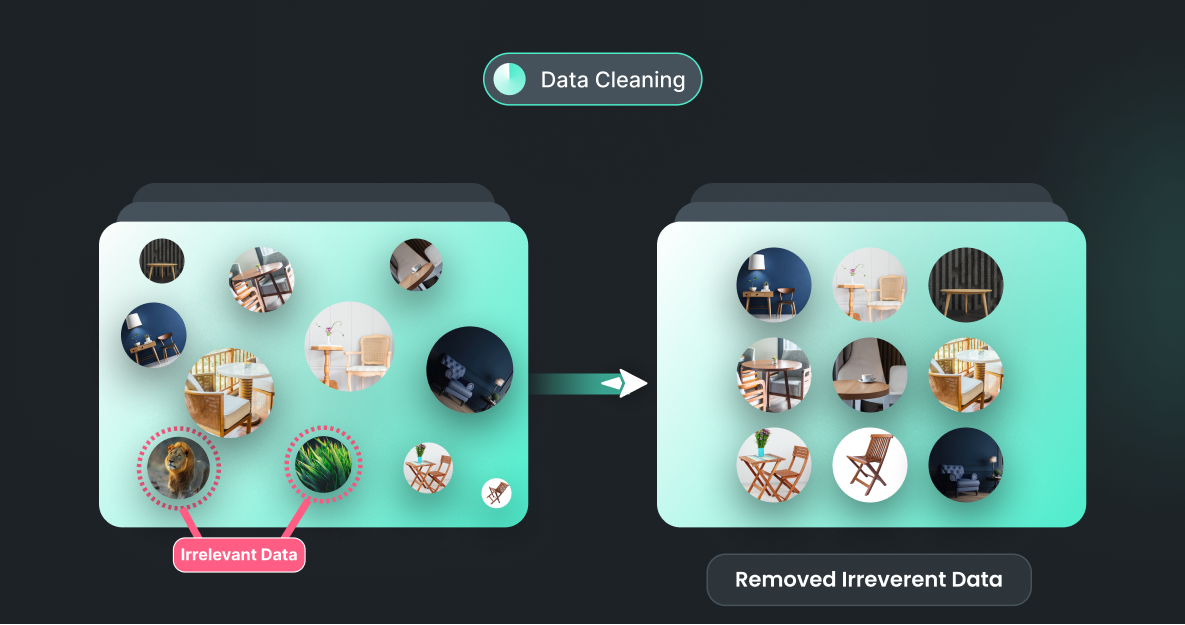

You might be using any open source dataset or collecting your own dataset. No matter how clear your guidelines and expectations were, there is a high chance that you ended up getting some irrelevant data.

Taking our chair and table detection example forward, let’s say you collected lots of images of tables and chairs in the garden, office, and rooms. Now our focus here is on chairs and tables, right? But let’s say there are some images in which only the grass of the garden is there, or only the office wall is there, or maybe images of kids playing at home. It can happen that while clicking the image of a table and chair, there are some frames in which our target item is not there.

Such irrelevant images won’t help us achieve the objective, and when the model is trained on such images, it may lead to poor performance in real-life scenarios and cause overfitting.

So it is better to have a post-process after collecting your own data or maybe after acquiring any open source or off-the-shelf dataset to remove irrelevant data to prevent overfitting.

It is not feasible to collect more and more training data all the time due to so many containers, as data collection can be costly and time-consuming. In such cases, data augmentation can help enlarge existing datasets without collecting new data.

For the table and chair detection model, we can enlarge the existing image dataset by flipping, rotating, cropping, or shearing the existing images. Data augmentation is not limited to image datasets only, even for natural language processing tasks, we can use techniques like back-translation, word replacement, or adding noise to the text to enlarge the existing text data.

Data augmentation helps us increase not only the size of the dataset but also its diversity. This additional data variation can help the model learn more robust and generalizable features, reducing its tendency to overfit the original training data. In other words, data augmentation makes the model more flexible and less sensitive to minor variations in the input data, which can result in better performance on new, unseen data.

As explained in the blog, “All About Training Datasets for Machine Learning ” we need to split the available training dataset into training, validation, and testing rather than just training the model on the entire dataset and then checking luck with real-life data.

Training dataset splitting is an important technique used to prevent overfitting in machine learning. In this, the training set is used to train the model, the validation set is used to tune the hyperparameters and prevent overfitting, and the testing set is used to evaluate the model's performance on new, unseen data.

By separating the data in this way, the model is prevented from memorizing the training set and overfitting it. The validation set helps to ensure that the model is not overfitting to the training data by measuring its performance on a different dataset. If the model performs well on the training set but poorly on the validation set, it is an indication that it is overfitting the training set.

Furthermore, the testing set provides a final evaluation of the model's performance on completely new and unseen data. This helps to ensure that the model is able to generalize well to new data and is not simply memorizing patterns from the training set.

When you are relying on a smaller dataset, in that case, you can explore an alternative option for dataset split, which is cross-validation. Cross-validation is a similar technique to training dataset splitting, but in this approach, we split the available data into K equally sized partitions, or "folds".

The model is then trained and evaluated K times, each time using a different fold for validation and the remaining K-1 folds for training. The K evaluation scores are then averaged to produce a single estimate of model performance.

Different models based on different hyperparameters are trained and validated in a similar manner, and then the model that achieves the highest average performance is selected.

Despite being comparatively more computationally expensive than simple dataset splitting, cross-validation is a great technique to prevent overfitting and find optimal hyperparameters.

Early stopping is a powerful technique used to prevent overfitting in machine learning models. Early stopping helps the model avoid learning the noise or irrelevant patterns in the training dataset.

As discussed earlier, we are splitting the available dataset into training, validation, and testing. Now the model’s performance is being measured on the validation dataset after each epoch or a certain number of training iterations. To measure the model’s performance, we calculate metrics like mean squared error (MSE) or mean absolute error (MAE).

Generally, at the initial level, models learn from the training dataset, and their performance improves on the validation dataset. However, at a certain point, further training causes the model to start over-optimizing the training data and perform worse on the validation set.

So at this point, when the validation loss or error starts to increase or no longer improves significantly, we halt the training process, and this technique is referred to as “early stopping.”

Apart from helping prevent the model from fitting closely on the training dataset and improving the generalization ability, early stopping is helpful in various other ways as well. It helps in saving computational resources and time in training models, which is not contributing to the model’s performance improvement.

Early stopping is one of the most simple and intuitive approaches to preventing overfitting. It does not require any complex methods or hyperparameter tuning in it.

Each layer in the neural network introduces some sort of learnable parameter. The more layers, the more complex the model will be, and the complex model will try to fit closely and try to remember noise and capture some irrelevant patterns from the training dataset.

So the idea is very simple, remove some layers from the neural network and reduce the model's complexity. Deep neural networks with a large number of layers have a higher capacity to learn intricate details in the training data. While this can be beneficial for certain complex tasks, it can also make the model more susceptible to overfitting. By removing layers, we decrease the model's capacity and constrain its ability to fit complex and noisy patterns in the training data.

Apart from that, a simpler model with fewer layers is more likely to capture the essential underlying patterns in the data rather than the noise or specific idiosyncrasies of the training set. This improved generalization ability allows the model to perform better on unseen data.

Along with all these benefits, of course, it helps in making the training process more efficient. This can save computational resources and training time.

Now the very interesting thing here is to define which layer to remove! There are several ways, and there is no one size that fits all. We can decide the importance and contribution of each layer to the overall model performance and remove the layer that has less impact on the overall model performance. We can also analyze some layers which are not dependent on any other layer and their output is not very crucial so we can remove such layers as well. Another approach we can opt for is validation performance. We can remove different layers at different times and see how the model is performing and remove the layer which is not contributing enough to the model’s performance. So there are various approaches to choose from and that depends on your particular use case only!

Dropout is similar to layer removal, but in this case, we are randomly deactivating or dropping out certain neurons, unlike removing an entire layer. Dropout is a regularization technique that can be highly effective in preventing overfitting in machine learning models.

When the neural network consists of a large number of neurons it means it has lots of parameters where certain neurons become very dependent on the presence of specific other neurons. This refers to co-adaption. This can lead to overfitting, as these neurons may only work well when certain other neurons are present. Dropout breaks up these dependencies by randomly deactivating neurons during training. This forces the network to learn more robust and independent representations, preventing overfitting to specific combinations of neurons.

During each training iteration, a different set of neurons is dropped out, resulting in different subnetworks being trained. This ensembling effect helps the model to generalize better by averaging the predictions of these subnetworks at inference time. It acts as a form of model averaging, which tends to reduce overfitting and improve generalization performance.

Also, when dropout is applied, each neuron must be able to make accurate predictions even when some of its neighboring neurons are missing. This encourages the network to distribute the learned information across multiple neurons, making the model more robust to the absence of specific neurons during inference.

In the end, of course, dropping out will offer computational benefits. During training, only a fraction of the neurons are active, reducing the number of computations required for each forward and backward pass. This results in faster training compared to using the full model without dropout. Furthermore, dropouts' regularization effect often reduces the need for other forms of regularization or early stopping, simplifying the training process.

L1 and L2 regularization are two common regularization techniques used in machine learning models to prevent overfitting and improve generalization. They are regularization terms added to the loss function during training to penalize large parameter values.

Let’s assume we have a linear regression model represented by the equation: y = w1 * X1 + w2 * X2. where y is the target variable, w1, and w2 are the coefficients (weights), and X1 and X2 are the input features.

L1 regularization, also known as Lasso regularization, adds a penalty term to the loss function proportional to the absolute value of the model's parameters. The loss function with the L1 penalty term would be

Loss = (1/N) * ∑(y_true - y_pred)^2 + λ * (|w1| + |w2|)

where N is the number of data samples, y_true is the true target value, y_pred is the predicted value, and λ is the regularization parameter.

Let's assume that during training, the L1 regularization pushes the model to select the most important feature, and the coefficients become:

w1 = 2.0 and w2 = 0.0

L1 regularization encourages the model to reduce the impact of less important features by driving their corresponding weights toward zero. This can lead to sparse feature selection, where some weights become exactly zero, effectively removing the corresponding features from the model. L1 regularization is useful when the dataset contains many irrelevant or redundant features, as it can help improve model interpretability and reduce overfitting.

L2 regularization, also known as Ridge regularization, adds a penalty term to the loss function proportional to the square of the model's parameters. The loss function with the L2 penalty term would be:

Loss = (1/N) * ∑(y_true - y_pred)^2 + λ * (w1^2 + w2^2)

Assuming that L2 regularization is applied and the coefficients become

w1 = 1.5 and w2 = 0.8

L2 regularization encourages the model to spread the impact of features more evenly by reducing the magnitudes of all the parameters. It does not promote sparsity like L1 regularization, as the weights do not become exactly zero unless explicitly optimized. L2 regularization is particularly useful when the dataset contains correlated features or when dealing with ill-conditioned problems. It helps to stabilize the model and reduce the sensitivity to small changes in the input data, thereby preventing overfitting.

I hope our extensive discussion on preventing overfitting has been helpful in achieving your machine learning model goals. Throughout this discussion, we've seen that planning the training process and controlling model complexity can be effectively managed in-house with the expertise of AI developers.

However, when it comes to the complex task of acquiring high-quality training data, there are numerous factors to consider, such as diversity, unbiasedness, project planning, annotation, and more. Managing all these aspects can be challenging and time-consuming.

At FutureBeeAI, we specialize in providing diverse, unbiased, and well-structured datasets from all around the world. By partnering with us for your training dataset needs, you can save valuable time and resources. Our expertise in dataset acquisition and management ensures that you have access to the data you need to build robust and reliable models.

Contact us today to discuss your specific use case and let us help you take your machine-learning projects to the next level!